Shit in -> shit out 📤

From the article:

Knowing means that the search for a watermark that identifies AI-generated content (and that’s infallible) has now become a much more important - and lucrative - endeavor, and that the responsibility for labeling AI-generated data has now become a much more serious requirement.

Simply wanting such a thing to exist isn’t going to magically make it happen. I seriously doubt that any such “watermark” (I think they meant “fingerprint” since it’d need to work even if not deliberately added) can be found.

I suspect the actual solution is to curate the quality of the input data, regardless of whether it’s AI-generated or not. The problem with autophagy is the loss of rare inputs, so try to ensure those inputs are found and included in the training data. It’s probably fine to have some AI-generated content in the training data alongside the real stuff. Indeed, as long as the AI-generated content is subject to the same sort of selective pressure as the real content, it’s probably good to have.
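To make the "loss of rare inputs" point concrete, here's a toy sketch. It is not the study's method, just a crude stand-in: the "model" is a list of category frequencies, and each generation it is refit on samples drawn partly from itself and partly from the real distribution. The function names, the 51-category setup, and all parameters are made up for illustration.

```python
import random
from collections import Counter


def next_generation(model, real, n_samples, real_fraction, rng):
    """Refit a categorical 'model' on a mix of its own samples and real data."""
    categories = list(range(len(real)))
    n_real = int(n_samples * real_fraction)
    n_synth = n_samples - n_real
    # Synthetic part: sampled from the current model (its own output).
    data = rng.choices(categories, weights=model, k=n_synth)
    # Real part: sampled from the fixed, true distribution.
    data += rng.choices(categories, weights=real, k=n_real)
    counts = Counter(data)
    # "Training" here is just taking empirical frequencies.
    return [counts[c] / n_samples for c in categories]


def surviving_categories(real_fraction, generations=50, n_samples=200, seed=0):
    """Count categories the model can still produce after many generations."""
    rng = random.Random(seed)
    # Assumed toy distribution: one common category and 50 rare ones.
    real = [0.5] + [0.5 / 50] * 50
    model = real[:]
    for _ in range(generations):
        model = next_generation(model, real, n_samples, real_fraction, rng)
    return sum(1 for w in model if w > 0)


if __name__ == "__main__":
    for fraction in (0.0, 0.1, 0.5):
        print(f"real fraction {fraction}: "
              f"{surviving_categories(fraction)} of 51 categories survive")
```

With `real_fraction=0.0`, a category that drops to zero samples can never come back, so the rare ones die off over generations. Mixing in real data gives them a chance to be reintroduced each round. This says nothing about whether human-curated AI output behaves more like the "real" part or the "synthetic" part, which is the bit that would actually need testing.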

We’d need to test and see if AI-generated content that is curated by human quality assurance still causes MADness.

My suspicion is that this would only slow down the degradation of the outputs, rather than stop it completely.

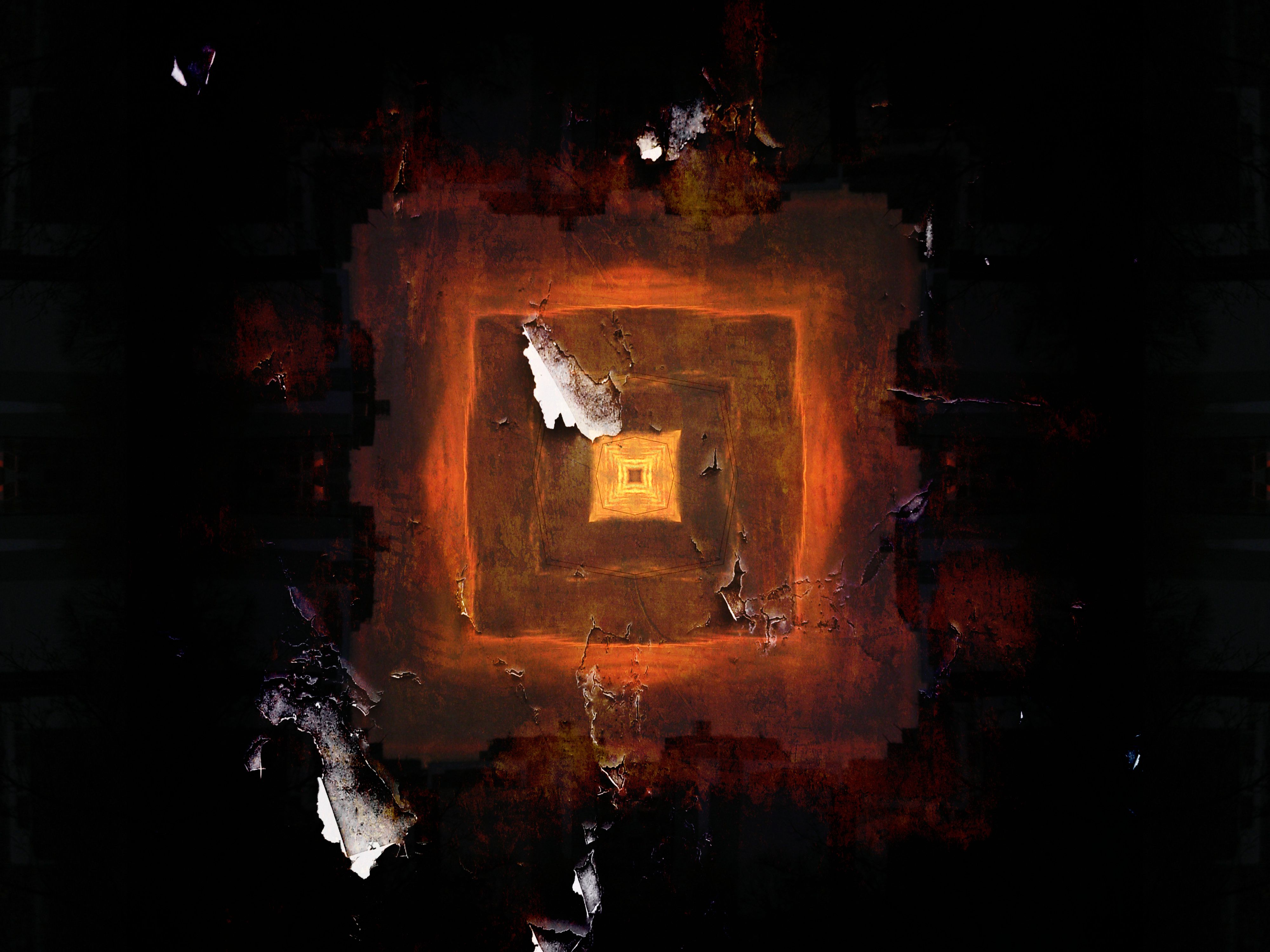

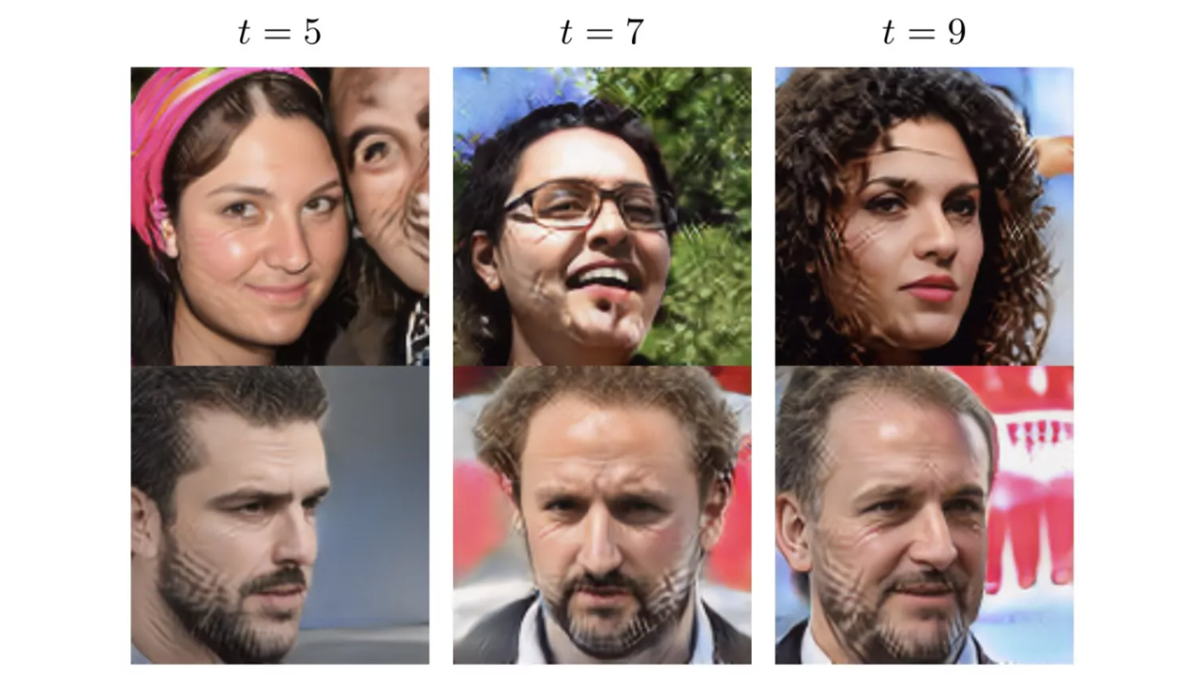

Come on, where are all the fucked up generated images??

Weird choice making an article about this and then barely showing it.

The study linked in the article has many of those, starting on page 20.